Update: This hybrid lab box has since been rebuilt as a physical Nexenta box.

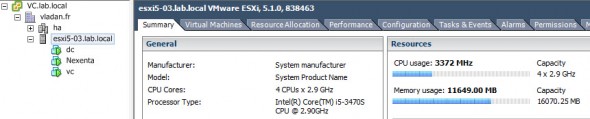

This mini box has now been running in my lab for a couple of days (weeks, in fact). It runs a DC and vCenter from local storage, plus the Nexenta VSA, which was set up with RDMs to a mix of SSDs and SATA disks. The SATA disks are in RAID 0 (no redundancy) to obtain the best performance.

The HA cluster above runs two other ESXi hosts that were already present in my lab.

When I start the box in the morning (it does not run 24/7), the only problem I have is that the iSCSI target does not mount automatically. Each time, I have to rescan the ESXi software iSCSI initiator to find the target, even though the Nexenta VSA is configured to start automatically with the host as the first VM. I'm pretty sure there is a workaround, but I haven't looked into it yet.
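One possible workaround, sketched here and not tested on this box, is to trigger the rescan from a script once the VSA has had time to boot. The sketch assumes SSH is enabled on the ESXi host and uses the `esxcli storage core adapter rescan` command; the host name, the adapter name, and the delay are hypothetical placeholders.

```python
import subprocess
import time


def build_rescan_command(host, user="root", adapter=None):
    """Return the ssh command that asks an ESXi host to rescan storage adapters."""
    esxcli = ["esxcli", "storage", "core", "adapter", "rescan"]
    # Rescan a single adapter (e.g. the software iSCSI vmhba) or all of them.
    esxcli += ["--adapter", adapter] if adapter else ["--all"]
    return ["ssh", f"{user}@{host}"] + esxcli


def rescan_after_delay(host, delay_s=300, adapter=None,
                       run=subprocess.run, sleep=time.sleep):
    """Wait for the VSA to come up, then issue one rescan.

    A zero exit code only means the rescan ran, not that the target was found.
    """
    sleep(delay_s)
    return run(build_rescan_command(host, adapter=adapter))
```

Calling `rescan_after_delay("esxi-mini.lab.local", adapter="vmhba33")` from another machine in the lab would then replace the manual rescan; both names are made up for illustration, and the right delay depends on how long the VSA takes to boot.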

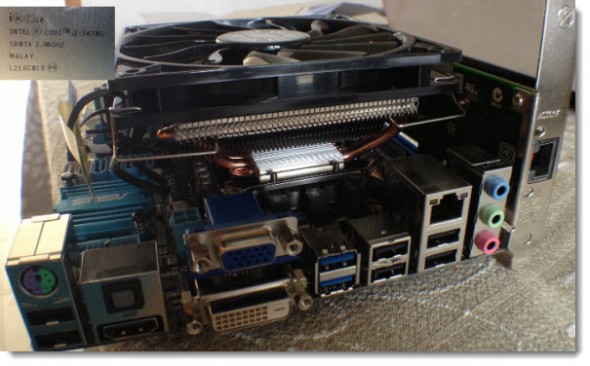

The details of the hardware setup, disks, and parts used in this “hybrid” approach for this MiniBox with 16 GB of RAM can be found in these posts:

For testing, I used the same performance test as for my old NAS box. A preconfigured ISO image is available here: https://vmktree.org/iometer/ together with video instructions.

Basically, I just took an XP VM and created and attached a new 10 GB virtual disk formatted as eagerzeroedthick. The tests were run on an iSCSI LUN created on the Nexenta VSA. A single 1GbE NIC is configured for iSCSI or NFS. The other NIC carries the management network, so the only traffic going through the storage NIC is storage traffic.
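Since all storage traffic crosses a single 1GbE link, it helps to know the ceiling of that link before reading the numbers. The rough calculation below ignores Ethernet, TCP/IP, and iSCSI/NFS protocol overhead, so real figures will be lower; the block sizes are illustrative assumptions.

```python
def link_ceiling_mb_s(link_gbit_s=1.0):
    """Raw link rate in MB/s (decimal), before any protocol overhead."""
    return link_gbit_s * 1000 / 8


def iops_ceiling(block_kib, link_gbit_s=1.0):
    """Upper bound on IOPS for a block size if the link is the only limit."""
    bytes_per_s = link_gbit_s * 1e9 / 8
    return bytes_per_s / (block_kib * 1024)


for block_kib in (8, 32):
    print(f"{block_kib} KiB blocks: at most {iops_ceiling(block_kib):,.0f} IOPS "
          f"on a {link_ceiling_mb_s():.0f} MB/s link")
```

Any throughput result close to 125 MB/s therefore says more about the network link than about the disks behind it.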

Mini-ITX Hybrid ESXi Whitebox – The performance tests

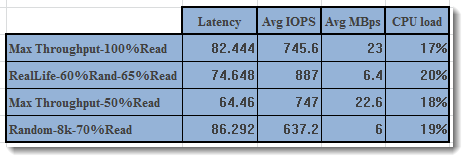

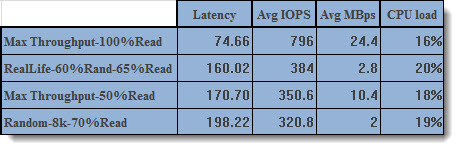

As for the performance results, I ran each test 5 times to get a representative average. The tests were done first on the iSCSI LUN and then on an NFS mount, with the same VM. During the tests, no other VMs were running on that (virtual) datastore.
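Averaging several runs is easy to script, and looking at the spread alongside the mean shows how much the runs disagree. This is a minimal sketch; the values in `example_iops` are placeholders, not the measurements from this article.

```python
from statistics import mean, stdev


def summarize(runs):
    """Return (mean, sample standard deviation, spread in % of the mean)."""
    avg = mean(runs)
    sd = stdev(runs)
    return avg, sd, 100 * sd / avg


# Placeholder values, NOT the measurements from this article.
example_iops = [1000.0, 1040.0, 980.0, 1010.0, 970.0]
avg, sd, spread = summarize(example_iops)
print(f"mean={avg:.0f} IOPS, stdev={sd:.1f}, spread={spread:.1f}%")
```

A spread of a few percent suggests the average is trustworthy; a much larger one usually means something else was touching the datastore during a run.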

Here are the results for the iSCSI LUN (the average of 5 runs):

And here are the results for NFS (exactly the same VM with exactly the same tests):

Wrap up:

The results show better performance on iSCSI, although NFS performs well too, especially in the max throughput test with 100% read. The real-life test shows about twice as many IOPS on iSCSI as on NFS. Despite the spinning SATA drives, which are the slowest element, the box certainly provides good value for the money. No doubt about it.

The advantage of this mini box is evidently the small form factor, which is at the same time a limiting factor. There is no upgrade path, except for replacing the SATA drives with several SSDs. The RAM is maxed out at 16 GB, which is enough to run a management cluster and the VSA at the same time.

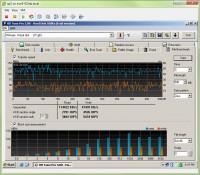

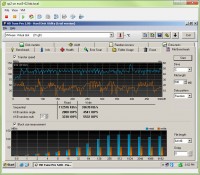

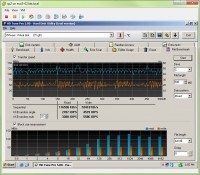

Update: Here are some more screenshots from running the HD Tune Pro trial in the VM. Note the IOPS columns in the first two pics…


The future is definitely full flash, and as for myself, I have quit buying spinning disks. Thanks for reading ESX Virtualization. Follow via Twitter or RSS

The whole article series:

- Homelab Nexenta on ESXi – as a VM

- Building NAS Box With SoftNAS

- Performance Tests of my Mini-ITX Hybrid ESXi Whitebox and Storage with Nexenta VSA (This article)

- Fix 3 Warning Messages when deploying ESXi hosts in a lab

Is that the best network performance you can get out of the VSA? I run the VSA in a small production system at my company (maybe 300GB of written (post-dedupe) data, of which less than 30GB is fairly critical. I didn’t think it was worthwhile to have a high-grade NAS with only a few dozen gigs on it, so I went with Nexenta VSA). The performance of it is not great though, I believe at least in part due to the NIC drivers. I too get roughly 20MB/s (+/-) over NFS; I have no need for iSCSI on Nexenta. Back-end storage is 3PAR (shared of course with the rest of the VMware environment) with raw device maps. Network is all 10GbE.

It’s not critical, as the use cases I have here are trivial. I used to do clustering with SCSI bus sharing, but that didn’t work out (2 or 3 major outages with full data loss), so I disabled it last year (no issues since).

Just wondering…

Which network drivers are you using? The more performant vmxnet3 is supported with the latest release, even if I haven’t tested it yet in my VSA installation. It’s on my TO_DO list…

The best performance, IMHO, still comes from having a dedicated box for it. Storage, and especially performance tweaking, can get quite complex, but if you see the bottleneck only on the network side, then the network drivers might solve it.

It’s simple to explain those results: by default NFS is synchronous, so for each write you have to wait for an ack. That’s why you must add an SSD as SLOG to your Nexenta box.

By the way, by default iSCSI is asynchronous, so once the data is in RAM it sends the ACK, but if you have a power loss you will lose all the data in RAM.

That’s why you should normally set the sync parameter to always, and when you configure your iSCSI LUN you should disable the write-back cache.

If you do that without adding a SLOG you will have poor performance with iSCSI too, but your data will be safe.
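The effect described in this comment can be put into rough numbers. When every write waits for a commit to stable storage before it is acknowledged, the IOPS ceiling for a single outstanding I/O is about 1 / commit latency. The latencies below are assumed round figures for illustration, not measurements from this box.

```python
def sync_iops_ceiling(commit_latency_ms, outstanding_ios=1):
    """IOPS upper bound when each write waits for a commit to stable storage."""
    return outstanding_ios * 1000.0 / commit_latency_ms


# Assumed, illustrative commit latencies in milliseconds.
assumed_latency_ms = {
    "SATA disk, no SLOG": 8.0,
    "SSD as SLOG": 0.2,
}

for device, latency in assumed_latency_ms.items():
    print(f"{device}: about {sync_iops_ceiling(latency):,.0f} sync writes/s")
```

Under these assumptions, the gap between the two cases is more than an order of magnitude, which is why synchronous writes without a fast log device perform so poorly.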