Homelab Nexenta on ESXi

In the article I wrote a few days back, I was building a NAS box for my lab that I planned to use not only as a pure storage box but also as an ESXi host. At the same time, I wanted to explore ZFS features such as L2ARC and ZIL caching on SSDs. Unfortunately, I wasn't able to do that with SoftNAS, since it's only possible in the paid version, which is aimed at the enterprise market (and comes with 7/7 support). You can test SoftNAS for 60 days, and it's worth a try: this young startup provides a rather good and polished solution, built around a hardened CentOS release and delivered as a VSA. But I'm moving on and testing the Nexenta VSA running on ESXi.

Update: Nexenta is now installed on bare metal rather than as a VSA, since I built a new whitebox with a Haswell CPU. So I now have 3 ESXi whiteboxes plus the dedicated storage box where I run Nexenta. It's a test bed, so at the moment it's populated with a single Samsung 840 Pro 512 GB SATA III SSD.

So in my lab I decided to try Nexenta, which allows you to assign each disk to whichever function you need (data, ZIL, L2ARC) when creating your pool. As I wrote in my previous article, I bought some parts that could fit in my rather small case and reused the parts from my previous build. The board I used, the P8H77-I, can actually do passthrough (VT-d), but I got a PSOD when rebooting the box. So after testing Nexenta on bare metal (it ran sweetly with the local SATA controller), I thought the box could definitely do more than just serve my shared storage… -:)

So I ended up using RDMs for the locally attached disks. This setup can also be useful for people with hard drives larger than 2 TB: vSphere 5.1 doesn't support virtual disks (VMDKs) larger than 2 TB, but a physical-compatibility RDM can map the whole drive.

This hybrid (ESXi + NAS) setup with RDMs was inspired by fellow blogger Marco Broeken, who has done the same in his homelab with a low-power Nexenta box (with a Core i3 CPU), although he uses an IBM ServeRAID M1015 expansion card (which needs to be flashed into IT mode).

My Homelab Nexenta on ESXi – NAS setup:

The Asus P8H77-I board has 6 SATA ports and I used them to plug in the disks I had:

- 1 SSD 128 GB for ZIL

- 1 SSD 256 GB for L2ARC

- 1 SSD 64 GB for local storage of the ESXi host

- 2 SATA 500 GB drives in RAID 0 (NO REDUNDANCY, I know. I could do RAID 10 if I had more space and disks)
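In ZFS terms, the layout above is a striped data pool with a separate log (ZIL) device and a cache (L2ARC) device. Nexenta's GUI builds this for you; the dry-run sketch below only prints the equivalent `zpool create` command so you can see how each disk gets its role. The pool name `tank` and the `c2tXd0` device names are hypothetical placeholders, not what your VM will necessarily show. The 64 GB SSD stays outside the pool as the ESXi local datastore.

```shell
#!/bin/sh
# Dry-run sketch: print the zpool command for the disk layout above.
# HYPOTHETICAL pool and device names; list your real disks with `format` in the VM.
POOL="tank"              # hypothetical pool name
DATA="c2t0d0 c2t1d0"     # 2 x 500 GB SATA (RDMs), striped = RAID 0, no redundancy
LOG="c2t2d0"             # 128 GB SSD as ZIL (separate log device)
CACHE="c2t3d0"           # 256 GB SSD as L2ARC (read cache)

zpool_create_cmd() {
  echo "zpool create $POOL $DATA log $LOG cache $CACHE"
}

zpool_create_cmd
```

With a second SSD, replacing `log $LOG` with `log mirror <ssd1> <ssd2>` would mirror the ZIL.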

The PCI-E slot is used for an additional NIC dedicated to storage traffic. The onboard Realtek NIC is recognized by the latest ESXi 5.1 release without problems.

Being fairly limited by the motherboard doesn't give you many options to play with. The PCI-Express slot had to be used for an additional NIC (or a multi-port NIC), and that's it. No other expansion card can fit in… Only when running Nexenta on bare metal could you use that slot for a controller card with additional SATA/SAS ports. Also be aware that there is little space inside the box.

Here I'd like to document the things I've done and the things I had to dig into a bit.

Homelab Nexenta on ESXi – How to create RDMs on VMware ESXi:

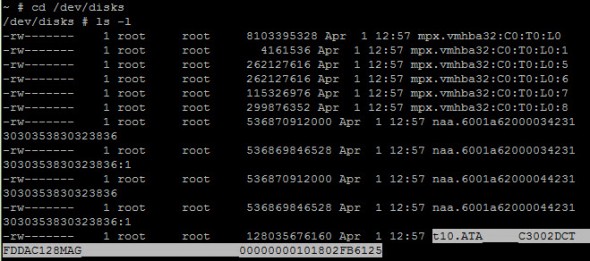

After connecting to your ESXi host via SSH, run `ls -l /dev/disks/`.

You'll see output like the one below. Only the lines starting with `t10.` interest you, not the `vml.` entries.

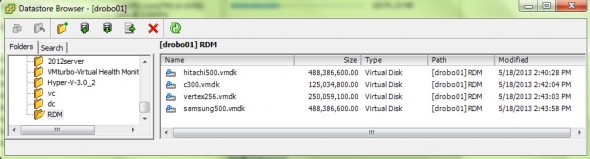

Now use `vmkfstools -z` to create a VMDK mapping file for each disk (`-z` creates a physical-compatibility, pass-through RDM). As the destination, specify a datastore that will hold the VMDK mapping file. I used the local datastore that the Nexenta VM runs from.

`vmkfstools -z /vmfs/devices/disks/<name of the physical disk> <destination folder for the VMDK mapping files>/<name_of_the_RDM_file>.vmdk`

An RDM is just another VMDK, but rather than pointing to a -flat.vmdk file as a normal virtual disk does, the VMDK points to a physical device (in my case the SATA drive). Repeat these steps for each physical drive you want to map.
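If you have several drives to map, a small loop saves some typing. This is a dry-run sketch: it only prints one `vmkfstools -z` command per whole `t10.` disk (skipping `vml.` entries and `:N` partitions), so you can review the list and remove the disk that holds your ESXi datastore before running anything. The destination folder `DEST_DIR` and the `rdmN.vmdk` file names are hypothetical, and the folder must exist before you run the printed commands.

```shell
#!/bin/sh
# Dry-run sketch: print (not run) one vmkfstools -z command per whole t10.* disk.
# HYPOTHETICAL: DEST_DIR and the rdmN.vmdk names; create DEST_DIR beforehand.
DEST_DIR="${DEST_DIR:-/vmfs/volumes/datastore1/nexenta-rdm}"

# Keep only t10.* device names without a ":N" partition suffix (drops vml.* too).
whole_t10_disks() {
  grep '^t10\.' | grep -v ':'
}

# Turn each device name read on stdin into a vmkfstools -z (physical RDM) command.
rdm_commands() {
  i=1
  while read -r dev; do
    echo "vmkfstools -z /vmfs/devices/disks/$dev $DEST_DIR/rdm$i.vmdk"
    i=$((i + 1))
  done
}

# Only does something on an ESXi host; review the output, drop the disk that
# holds your local datastore, then paste the remaining lines.
if [ -d /vmfs/devices/disks ]; then
  ls /vmfs/devices/disks | whole_t10_disks | rdm_commands
fi
```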

The listing shows a big file size, but in fact these mapping files aren't that big… they take up only a few kilobytes.

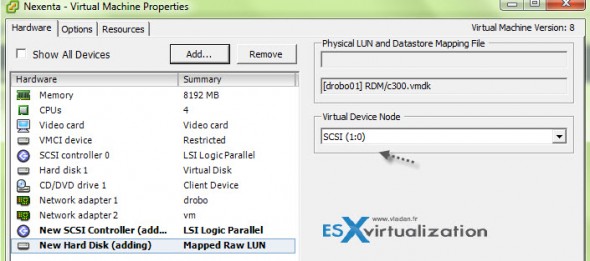

When you add the disks to your Nexenta VM, use Add Disk > Existing virtual disk and browse to the RDM VMDK files you just created.

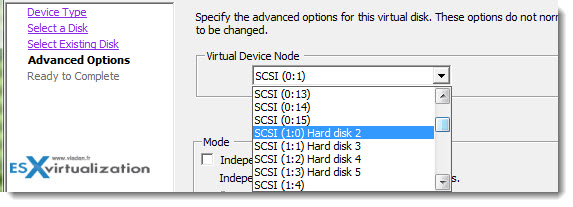

Pick SCSI 1:0 instead of 0:x as the virtual device node, so the RDMs get a separate virtual SCSI adapter. See the image above.
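To double-check that the RDMs really landed on the second virtual SCSI adapter, you can grep the VM's `.vmx` file for `scsi1:` disk entries. A minimal sketch, assuming the standard `scsiX:Y.fileName` keys; the function name and the `.vmx` path in the usage comment are hypothetical.

```shell
#!/bin/sh
# Sketch: show the virtual disks attached to the second SCSI controller (scsi1)
# by reading the scsi1:N.fileName entries of a VM's .vmx file.
list_scsi1_disks() {
  grep -E '^scsi1:[0-9]+\.fileName' "$1"
}

# Usage (HYPOTHETICAL path):
# list_scsi1_disks /vmfs/volumes/datastore1/nexenta/nexenta.vmx
```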

How to install VMware Tools in the Nexenta VM

When you install from the latest Nexenta Community ISO, you choose Solaris 10 (x64) as the guest OS, as stated in the installation guide. OK, but when you then try to install VMware Tools, the installer complains about the missing SUNWuiu8 package and aborts with an error.

To install VMware Tools anyway, do the following:

`# cd /tmp`

`# tar xzf /media/VMware\ Tools/vmware-solaris-tools.tar.gz`

`# cd vmware-tools-distrib`

`# nano bin/vmware-config-tools.pl`

Now press Ctrl+W to search for `SUNWuiu8` and comment out the line that checks for it!
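If you'd rather not edit the file interactively, `sed` can do the same job. This sketch assumes that every line mentioning `SUNWuiu8` in `bin/vmware-config-tools.pl` can be commented out on its own without breaking the Perl syntax, which may not hold for every Tools release, so compare the result with the `.orig` backup before running the installer. The helper name `comment_out_pkg` is made up for this example.

```shell
#!/bin/sh
# Sketch: comment out every line mentioning a package name, keeping a backup.
# ASSUMPTION: each matching line is a self-contained Perl statement, so a
# leading '#' disables it without breaking the script. Review the diff!
comment_out_pkg() {
  file="$1"
  pkg="$2"
  cp "$file" "$file.orig"
  # Prefix "# " to each line containing the package name.
  sed "/$pkg/s/^/# /" "$file.orig" > "$file"
}

# Usage, from the vmware-tools-distrib directory:
# comment_out_pkg bin/vmware-config-tools.pl SUNWuiu8
# diff bin/vmware-config-tools.pl.orig bin/vmware-config-tools.pl
```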

Then run:

`# ./vmware-install.pl`

I hope this post helps people who want to build a multipurpose homelab box that takes minimal space and isn't noisy (it has only 2 spinning disks, after all…).

The Networking:

The Nexenta VM has two network interfaces: one for management and the other for NFS/iSCSI traffic. I picked the default E1000 virtual adapter. There might be some gains from switching to the better-performing VMXNET3 adapter, and from using jumbo frames (MTU 9000) on the storage network, but I want to do some testing first.
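For when that testing happens, here is a dry-run sketch of the ESXi side of jumbo frames: set the MTU on the storage vSwitch and its vmkernel port, then verify with a non-fragmented `vmkping`. The ping payload follows from the headers: 9000 - 20 (IPv4 header) - 8 (ICMP header) = 8972 bytes. `vSwitch1`, `vmk1` and the storage IP are hypothetical, and the physical switch and the Nexenta interface would also need MTU 9000 for this to work end to end.

```shell
#!/bin/sh
# Dry-run sketch: print the ESXi commands for jumbo frames on the storage network.
# HYPOTHETICAL names: vSwitch1 (storage vSwitch), vmk1 (NFS/iSCSI vmkernel port).
VSWITCH="${VSWITCH:-vSwitch1}"
VMK="${VMK:-vmk1}"
MTU="${MTU:-9000}"

# Largest ICMP payload that fits in one unfragmented frame:
# MTU - 20 bytes (IPv4 header) - 8 bytes (ICMP header).
icmp_payload() {
  echo $(( $1 - 20 - 8 ))
}

echo "esxcli network vswitch standard set -v $VSWITCH -m $MTU"
echo "esxcli network ip interface set -i $VMK -m $MTU"
# -d sets the "don't fragment" bit, so an MTU mismatch anywhere makes the ping fail.
echo "vmkping -d -s $(icmp_payload "$MTU") <nexenta_storage_ip>"
```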

The actual Nexenta setup will be documented in a separate blog post. Stay tuned via RSS for more. If you haven't already, check out my previous post where I built the NAS box and tested SoftNAS as shared storage.

The whole series:

- Building NAS Box With SoftNAS

- Homelab Nexenta on ESXi – as a VM (This post)

- Fix 3 Warning Messages when deploying ESXi hosts in a lab

- Performance Tests of my Mini-ITX Hybrid ESXi Whitebox and Storage with Nexenta VSA

Yeah I know.

I can put it on Dropbox if you need it.

Thanks for the mention!

Did you know you can download a prebuilt virtual appliance with the VMXNET3 driver installed?

Hi Marco,

No problem. I deployed the Enterprise VSA, but it needs a trial serial number! The community serial number doesn’t work. And with the community edition site down, I wasn’t able to get the VSA.

Great fun.

Funny, I was working on the same project this week when I saw your SoftNAS blog post, and I was dismayed when I found out it is not free. I went to the Nexenta site to get the prebuilt community VM and observed the same thing: the website is down. Fast forward to this morning and reading this blog post. The only thing I want to add is that http://www.napp-it.org/ is another option worth mentioning.

Well, Nexenta is not free either when you want the extras and support… -:). That’s normal. But the functionality I was looking for in the SoftNAS free version just didn’t satisfy my needs for the homelab. That doesn’t change the fact that they make a very good Professional version with replication capabilities.

Yeah, I’ve heard about napp-it before, but it’s a little less known.

Thanks

I have been testing Nexenta for a year already and found the support community quite rubbish, to be honest. Lots of performance problems, and when I tried the enterprise version, Nexenta simply didn’t give any support. How the hell can I evaluate software that doesn’t work properly with no way at all to get support? I don’t know how they expect to convince me to spend £8k on something that doesn’t work.

I’ve also tried OpenIndiana and Solaris with napp-it: a very nice idea, but it simply is not reliable or straightforward to use. There is so much that could be done around ZFS, but there’s no good and easy implementation of it yet. If you really want to play with ZFS, the old-fashioned command line is your best bet, with Solaris or OpenIndiana.

Shame there still isn’t a graphical utility to play with ZFS, not even for Solaris, like the one on the big Sun storage boxes.

Best practice is to put ZIL drives on RAID 1 and L2ARC on a single drive. A loss of data on the ZIL drives results in a corrupted ZFS filesystem, and very little ZIL space is actually used, so 2x 16/32 GB SLC drives are enough. A loss of L2ARC only makes for slower reads, so one MLC 128/256 GB SSD (Samsung 840 Pro recommended) is what you need.

Here is a usage report from my small Ubuntu 12.04 LTS + ZFS storage:

(edited – link dead).

http://pastebin.com/2qqWK3mr

I know. The box is serving for tests only. I’m not storing anything important there. In addition, all the VMs are backed up by Veeam to a Drobo with BeyondRAID technology in it: http://www.vladan.fr/drobo-elite-iscsi-san-which-supports-multi-host-environments/

The mixed ESXi/Nexenta box is, as we say, just a “test rig”.

I do think it’s a good practice to mirror your ZIL/SLOG devices just to absolutely minimize the chance of corruption, but losing a ZIL/SLOG device does not mean that you get a corrupted ZFS filesystem. This is because all the data on your ZIL device is always mirrored in the ARC, which lives in the server’s RAM. Since NexentaStor flushes the ARC data to persistent storage every 5 seconds, you would have to lose your ZIL device and lose power to the server within 5 seconds of each other in order to have a corrupted ZFS volume.

The Samsung SSDs aren’t officially supported by Nexenta yet, but we’ve talked to them about getting them certified. Have you had any trouble with the 840 Pros? How long have you had the 840 Pro in your system?

Just wanted to say thanks for the system build information. I already had the same mainboard, and was pleased to read that I just needed to swap the CPU to get the VT-d features. I did this, but damaged (and then repaired) the pins on the motherboard.

Personally, I’m going to try video card passthrough for an HTPC desktop environment, and just nest the VM environment on top. I’ll have the host layer installed on a USB drive, using SATA passthrough for the NAS drives, and then the rest on the next layer. I figure this means my environment can be trashed and reloaded without having to move the data off the drives, if and when I need to rebuild it.

Hi Vladan,

Thanks for the article. One question: why Nexenta and not FreeNAS? I remember you had built a FreeNAS-based NAS before. Have you abandoned it and replaced it with Nexenta?

Thanks,

Jose

Hi Jose,

I like to try several storage products in my lab, so there was FreeNAS, Openfiler, Nexenta… but I can’t run all of them at the same time; I don’t have enough hardware. I certainly learned quite a lot from all of them, and as it’s not a production environment, I can afford the luxury of breaking things as needed. Homelab-as-a-service (HaaS). -:)