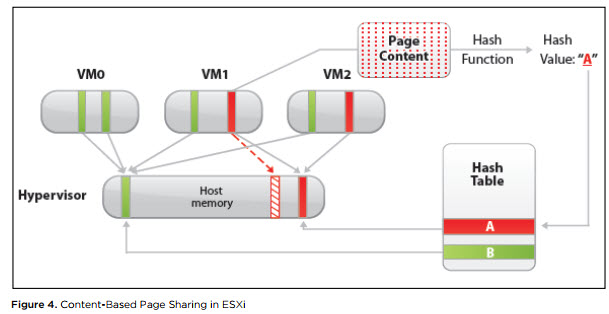

This post is another addition to our Tips category. Today we'll tackle another VMware vSphere memory management topic: VMware Transparent Page Sharing (TPS). The VMware ESXi hypervisor can track identical memory pages across VMs, which are most common when the VMs run the same OS. ESXi assigns a hash value to each page and, when two hashes match, compares the pages in detail, bit by bit. When ESXi finds identical pages on multiple VMs on a host, it can share them among the VMs via pointers to a single physical memory page.

Let's say there are 30 copies of the same memory page among different VMs with the same OS. ESXi keeps just one copy, and the rest are just pointers to it.
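To make the "hash first, then compare bit by bit" idea concrete, here is a minimal Python sketch. It is only a toy model, not how the vmkernel is implemented: the SHA-1 hash, the `share_pages` function and the sample pages are all assumptions made for illustration.

```python
import hashlib
from collections import defaultdict

PAGE_SIZE = 4096  # small-page size in bytes


def share_pages(pages):
    """Deduplicate pages and return (store, mapping).

    store   -- unique page contents actually kept in "physical" memory
    mapping -- for each input page, the index in store it points to
    """
    buckets = defaultdict(list)  # hash -> indices into store
    store = []
    mapping = []
    for page in pages:
        digest = hashlib.sha1(page).digest()  # the hash is only a hint
        for idx in buckets[digest]:
            if store[idx] == page:  # full comparison confirms the match
                mapping.append(idx)
                break
        else:
            # No identical page stored yet: keep this one as a new copy
            store.append(page)
            buckets[digest].append(len(store) - 1)
            mapping.append(len(store) - 1)
    return store, mapping


# 30 VMs: each holds one page common to all, plus one page unique to it
common = b"\x00" * PAGE_SIZE
pages = []
for vm in range(1, 31):
    pages.append(common)
    pages.append(vm.to_bytes(2, "big") * (PAGE_SIZE // 2))

store, mapping = share_pages(pages)
print(len(pages), "guest pages backed by", len(store), "physical pages")
```

The 30 copies of the common page collapse into a single stored page, while the 30 unique pages are kept as they are.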

It is the vmkernel that automatically identifies identical pages of virtual memory and consolidates them into a single page in physical memory. As a result, the total host memory consumed by virtual machines is lowered and we can allocate more memory to our VMs (to overcommit even more). TPS works in the background, scanning from time to time.

How VMware TPS works is demonstrated in this image from the VMware PDF called Understanding Memory Resource Management in VMware vSphere.

Transparent Page Sharing. Salting or not salting? Things got a bit more complicated…

Starting with vSphere 6, there is a notion of intra-VM TPS and inter-VM TPS. Intra-VM TPS is enabled by default and inter-VM TPS is disabled by default, due to some security concerns (we'll get to them in a minute).

What is meant by Intra-VM and Inter-VM in the context of Transparent Page Sharing?

- Intra-VM – TPS will de-duplicate identical pages of memory within a virtual machine, but will not share the pages with any other virtual machines.

- Inter-VM – TPS will de-duplicate identical pages of memory within a virtual machine and will also share the duplicates with one or more other virtual machines with the same content.

The security risk involved is pretty minimal:

Published academic papers have demonstrated that by forcing a flush and reload of cache memory, it is possible to measure memory timings to try and determine an AES encryption key in use on another virtual machine running on the same physical processor of the host server if Transparent Page Sharing is enabled between the two virtual machines. This technique works only in a highly controlled system configured in a non-standard way that VMware believes would not be recreated in a production environment.

And here is the change VMware made to make the infrastructure more secure but, in the end, less efficient (by default).

Statement from VMware:

Due to security concerns, inter-virtual machine transparent page sharing is disabled by default and page sharing is being restricted to intra-virtual machine memory sharing. This means page sharing does not occur across virtual machines and only occurs inside of a virtual machine. The concept of salting has been introduced to help address concerns system administrators may have about the security implications of transparent page sharing. Salting can be used to allow more granular management of the virtual machines participating in transparent page sharing than was previously possible. With the new salting settings, virtual machines can share pages only if the salt value and contents of the pages are identical. A new host config option Mem.ShareForceSalting can be configured to enable or disable salting.

How can an admin maximize the hardware investment?

By changing an advanced host configuration value (we'll see it below), he or she can disable salting.

But what are those settings and where to set them up?

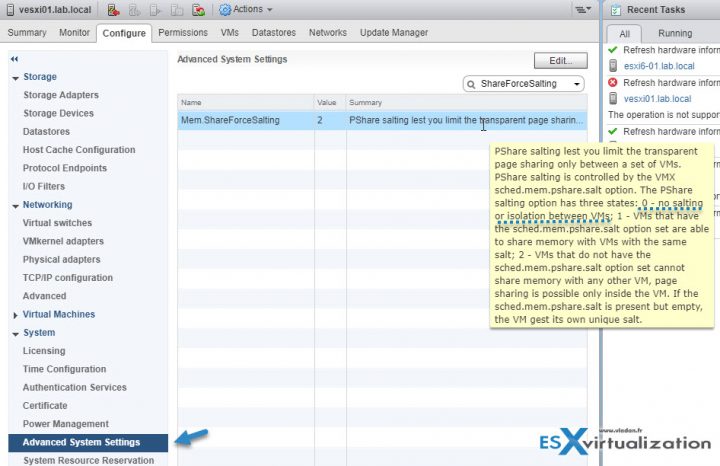

Things get more complicated here because there are two values to set: one at the host level (Mem.ShareForceSalting), the other within the VMX file (sched.mem.pshare.salt) of each VM concerned.

You must change the value on each individual host.

Mem.ShareForceSalting can have three values:

- 2 – Default value. No inter-VM TPS (intra-VM only). When sched.mem.pshare.salt is not present in the virtual machine configuration (VMX) file, the virtual machine's salt is set to a unique value, so its pages cannot match those of any other VM.

- 1 – The salt value is taken from sched.mem.pshare.salt. If it is not specified, the VM falls back to the old (inter-VM) TPS behavior, because its salt value is considered to be 0.

- 0 – Inter-VM TPS works as it did before the change. The value of the VMX option sched.mem.pshare.salt is ignored even if present. We will use this option for our lab.
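The three values can be summed up as a small decision function. This is a sketch of my reading of the list above and of VMware KB 2097593, not VMware code: the names `effective_salt` and `can_share` are made up, and the case where value 2 is combined with an explicit sched.mem.pshare.salt (the VMX salt is then used) is an assumption taken from the KB rather than from the list.

```python
import uuid


def effective_salt(share_force_salting, vmx_salt=None, vm_uuid=None):
    """Return the salt a VM ends up with for a given host setting.

    vmx_salt -- value of sched.mem.pshare.salt, or None if not in the VMX
    vm_uuid  -- stand-in for the per-VM unique value used when nothing is set
    """
    if share_force_salting == 0:
        return "0"  # VMX salt is ignored, every VM gets the same salt
    if share_force_salting == 1:
        return vmx_salt if vmx_salt is not None else "0"
    if share_force_salting == 2:
        if vmx_salt is not None:  # assumption based on KB 2097593
            return vmx_salt
        return vm_uuid if vm_uuid is not None else str(uuid.uuid4())
    raise ValueError("Mem.ShareForceSalting must be 0, 1 or 2")


def can_share(host_setting, vm_a, vm_b):
    """Two VMs may share identical pages only if their salts match."""
    return effective_salt(host_setting, **vm_a) == effective_salt(host_setting, **vm_b)


# Two hypothetical VMs without any salt in their VMX files
vm_a = {"vm_uuid": "uuid-a"}
vm_b = {"vm_uuid": "uuid-b"}

for setting in (2, 1, 0):
    print("Mem.ShareForceSalting =", setting, "-> inter-VM sharing:",
          can_share(setting, vm_a, vm_b))
```

With the default value 2 the two VMs get different salts and never share with each other, while 1 and 0 both put them back on the common salt 0.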

Connect via vSphere Web client > Select Host > Configure > System > Advanced System Settings.

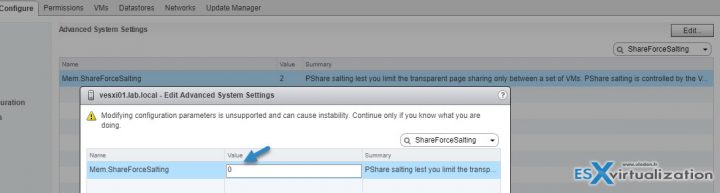

Then click the Edit button and enter Mem.ShareForceSalting into the search box to filter out all the other advanced settings. Enter “0” into the field and confirm by clicking the OK button.
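If you prefer the ESXi shell to the Web Client, the same option can, to my knowledge, be set with esxcli, where the setting is addressed as /Mem/ShareForceSalting. The small Python helper below only builds and prints the commands so you can review them before running them on a host. The helper name is made up for this example, and the esxcli route is my addition, not something taken from the KB article.

```python
def share_force_salting_commands(value):
    """Build the esxcli commands that set and then verify the option."""
    if value not in (0, 1, 2):
        raise ValueError("Mem.ShareForceSalting must be 0, 1 or 2")
    option = "/Mem/ShareForceSalting"
    return [
        # Set the integer value of the advanced option
        ["esxcli", "system", "settings", "advanced", "set",
         "-o", option, "-i", str(value)],
        # Read it back to confirm the change
        ["esxcli", "system", "settings", "advanced", "list",
         "-o", option],
    ]


for cmd in share_force_salting_commands(0):
    print(" ".join(cmd))
```

As with the Web Client method, this would have to be repeated on each individual host.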

You can also use PowerCLI to change those values for VMware Transparent Page Sharing. There is a script attached to the VMware KB article we're using as a source for our post.

Now everything is done. Until the next patch or upgrade, we're more efficient, or at least back in the state where VMware had always been before the security change.

Transparent Page Sharing – One last step.

For this setting to take effect, you don't have to reboot the hosts, but you must either:

- Migrate all the virtual machines to another host in the cluster and then back to the original host. Or

- Shut down and power on the virtual machines.

You may want to disable large pages as well, because ESXi has them enabled by default. Most modern guest operating systems use large pages, which the vmkernel cannot share. But consider this:

Use of large pages can also change page sharing behavior. While ESXi ordinarily uses page sharing regardless of memory demands, it does not share large pages. Therefore with large pages, page sharing might not occur until memory overcommitment is high enough to require the large pages to be broken into small pages. For further information see VMware KB articles 1021095 and 1021896.

So you may want to have a look at my post before disabling large memory pages within your environment:

It should certainly help in VDI environments where you run all or most VDI desktops with the same OS.

VMware KB 2097593: Additional Transparent Page Sharing management capabilities and new default settings

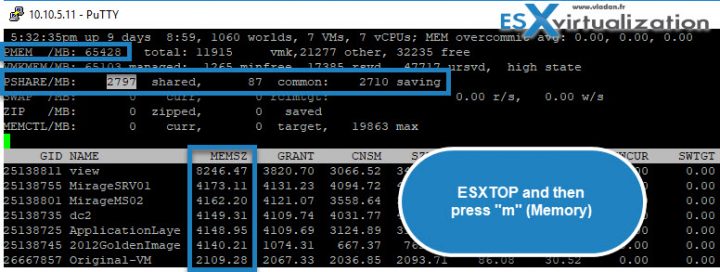

Update: Here is a view from my SSH session. Note that the host has 64 GB of RAM and I'm not really overcommitting memory in this particular case. Still, some memory pages are shared, and there are some savings.

Wrap Up:

VMware Transparent Page Sharing is one of the three or four memory optimization techniques that exist. We have already talked about memory ballooning in What is VMware Memory Ballooning? Ballooning uses a balloon driver inside each VM (via a special module) to reclaim unused memory from within the VM and make it available again to the ESXi host.

Besides those two, we'll also talk about memory compression and swapping, the two less popular techniques, since they come into play only as a last resort on hosts that are already heavily overcommitted.


How can you check that TPS is working correctly after enabling it, and what the dedupe ratio is?

Fire up an SSH session > run esxtop > hit “m” > check the values… I’ll put a screenshot to show that at the end of the post.
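The values to look for are on the PSHARE/MB line of the esxtop memory screen: shared, common and saving, where saving is roughly shared minus common. Here is a minimal Python sketch that pulls the numbers out of such a line. The line layout is written from memory and the sample figures are hypothetical (they are not taken from my host), so treat both as assumptions.

```python
import re

# Assumed layout of the esxtop memory-screen line, e.g.
# "PSHARE/MB:    2150  shared,     350  common:    1800 saving"
PSHARE_RE = re.compile(
    r"PSHARE/MB:\s*(\d+)\s+shared,\s*(\d+)\s+common:\s*(\d+)\s+saving"
)


def parse_pshare(line):
    """Extract the shared/common/saving values (in MB) from one line."""
    match = PSHARE_RE.search(line)
    if match is None:
        raise ValueError("not a PSHARE/MB line: %r" % line)
    shared, common, saving = (int(g) for g in match.groups())
    return {"shared": shared, "common": common, "saving": saving}


def sharing_ratio(stats):
    """Guest memory shared per MB of host memory actually backing it."""
    if stats["common"] == 0:
        return 0.0
    return stats["shared"] / stats["common"]


# Hypothetical sample line, for illustration only
sample = "PSHARE/MB:    2150  shared,     350  common:    1800 saving"
stats = parse_pshare(sample)
print(stats)
print("ratio: %.1f : 1" % sharing_ratio(stats))
```

A saving close to zero right after enabling inter-VM TPS is not necessarily a problem, since large pages are not shared until the host is under memory pressure.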