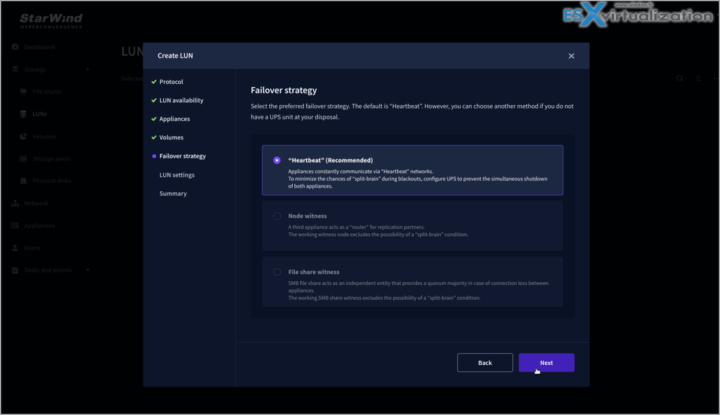

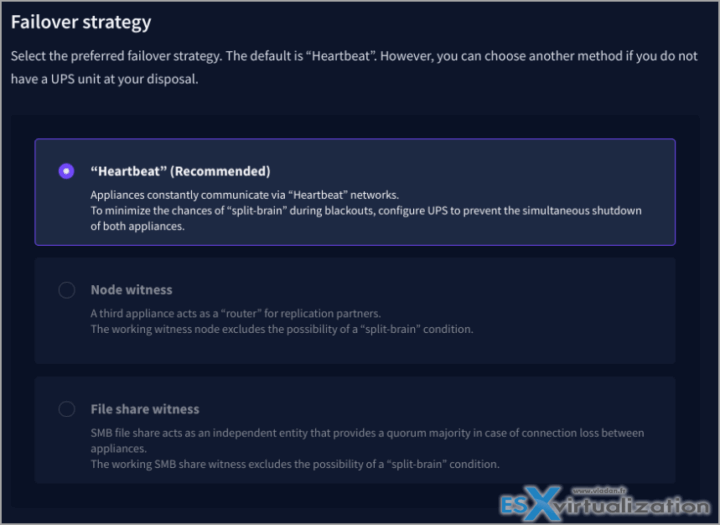

Highly available (HA) workloads are something we want in production: if a node fails, we want to know that the workload will be restarted on a remaining node. HA is not fault tolerance, so the workload will experience a short downtime before it is restarted on another node in the cluster, but that is known in advance and can be planned for. Today's post discusses the StarWind VSAN failover strategies, of which the latest version offers three: Heartbeat, Node Majority, or File Share Witness.

Heartbeat is a technology that helps avoid the so-called “split-brain” scenario, in which the HA cluster nodes are unable to synchronize but continue to accept write commands from the initiators independently. This can happen when all synchronization and heartbeat channels disconnect simultaneously and the partner nodes do not respond to the node’s requests.

If at least one heartbeat link is online, the StarWind services can communicate with each other over that link. The device on the node with the lowest priority is marked as not synchronized and is blocked for further read and write operations until the synchronization channel is restored.

Note: With heartbeat failover strategy, the storage cluster will continue working with only one StarWind node available.

StarWind Virtual SAN (VSAN) offers two primary failover strategies to ensure high availability (HA) and prevent data corruption in a clustered environment: Heartbeat and Node Majority. The Node Majority strategy can be implemented with either a Node Witness or a File Share Witness to maintain quorum in a two-node setup. These strategies are selected during the creation of a Highly Available (HA) device (LUN) and cannot be changed afterward. Below is a detailed explanation of each strategy, based on the information available from StarWind’s documentation and related sources.

The Node Majority strategy protects the synchronization connection without any additional heartbeat links. The failure-handling process starts when a node detects that it has lost the connection with its partner.

The main requirement for keeping the node operational is an active connection with more than a half of the HA device’s nodes. Calculation of the available partners is based on their “votes”.

In a two-node HA storage, both nodes would be disconnected if there is a problem on one node or in the communication between them, because neither can reach more than half of the votes. Therefore, the Node Majority failover strategy does not work with just two synchronous nodes. To resolve this, add a third Witness node, which counts toward the majority but neither stores data nor processes client requests.

A Witness node must be configured additionally for an HA device that uses the Node Majority failover strategy when it is replicated between two nodes. If the HA device is replicated between three nodes, no Witness node is required.
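The "more than half of the votes" rule can be written down in a few lines. This is only a minimal sketch of the arithmetic described above, not StarWind code; the function name `has_majority` and the idea that every member holds exactly one vote are assumptions made for illustration.

```python
def has_majority(reachable_votes: int, total_votes: int) -> bool:
    """Return True when a node can reach more than half of all votes.

    reachable_votes counts the node's own vote plus every partner
    (data node or witness) it can still contact.
    Assumption: every member holds exactly one vote.
    """
    return 2 * reachable_votes > total_votes


# Two data nodes, no witness: after a link failure each node sees only itself.
print(has_majority(reachable_votes=1, total_votes=2))  # False

# Two data nodes plus a witness: the node that still reaches the witness keeps running,
# the isolated node does not.
print(has_majority(reachable_votes=2, total_votes=3))  # True
print(has_majority(reachable_votes=1, total_votes=3))  # False
```

With two votes in total, a single surviving node holds exactly half, which is not a majority. That is why the third vote is needed.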


1. Heartbeat Failover Strategy

The Heartbeat failover strategy is designed to prevent the “split-brain” scenario, where HA cluster nodes lose synchronization but continue to accept write commands independently, potentially leading to data corruption. Here’s how it works:

Mechanism: Heartbeat relies on additional communication channels (heartbeat links) to maintain connectivity between nodes.

If all synchronization and heartbeat channels fail simultaneously, and the partner node does not respond, StarWind assumes the partner is offline. The remaining node continues operations in single-node mode, using the data written to it.

If at least one heartbeat link remains active, StarWind services communicate via this link. The device on the node with the lowest priority is marked as “not synchronized” and blocked from further read/write operations until synchronization resumes. Meanwhile, the synchronized node flushes its cache to disk to preserve data integrity in case of an unexpected failure.

To enhance stability, StarWind recommends configuring multiple independent heartbeat channels (e.g., separate network interfaces) to reduce the risk of split-brain.
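The mechanism above can be summarized as a small decision function. This is a simplified model of the behavior described in this post, not StarWind's implementation. The names `LinkState` and `heartbeat_action` are hypothetical, and the numeric priority (larger number means higher priority, the two nodes never share the same value) is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass
class LinkState:
    sync_up: bool       # at least one synchronization channel works
    heartbeat_up: bool  # at least one heartbeat channel works


def heartbeat_action(links: LinkState, my_priority: int, partner_priority: int) -> str:
    """Describe what one node does under the Heartbeat strategy (simplified)."""
    if links.sync_up:
        return "keep replicating"
    if links.heartbeat_up:
        # The nodes can still talk, so the lower-priority one steps back.
        if my_priority < partner_priority:
            return "mark device not synchronized and block I/O"
        return "stay active and flush cache to disk"
    # No channel left at all: the partner is assumed to be offline.
    return "continue in single-node mode"


sync_lost = LinkState(sync_up=False, heartbeat_up=True)
print(heartbeat_action(sync_lost, my_priority=1, partner_priority=2))
print(heartbeat_action(sync_lost, my_priority=2, partner_priority=1))

all_lost = LinkState(sync_up=False, heartbeat_up=False)
print(heartbeat_action(all_lost, my_priority=1, partner_priority=2))
```

The last branch is where split-brain can arise: if both nodes are alive but every channel is down, each of them ends up in single-node mode. This is the reason for the recommendation to use several independent heartbeat channels.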

Key Features: No additional witness required: Heartbeat allows a two-node cluster to operate without a third witness node or file share, making it cost-effective for smaller setups.

Single-node operation: The cluster can continue functioning with only one node available, ensuring high availability even if one node fails.

Network requirements: Heartbeat requires robust network connectivity. StarWind recommends at least a 100 Mbps network for management/heartbeat and a 1 Gbps (preferably 10 Gbps) network for iSCSI/heartbeat to ensure reliable communication.

Use Case: Ideal for two-node clusters where simplicity and cost savings are priorities, and a third witness node or external file share is not feasible.

Suitable for environments where high availability is critical, and the infrastructure can support multiple heartbeat links to avoid split-brain scenarios.

Recommended to configure an uninterruptible power supply (UPS) to prevent simultaneous node shutdowns during power failures, as this could disrupt synchronization.

Configuration: During HA device creation, select the Heartbeat failover strategy in the StarWind Management Console or Web UI.

Specify the synchronization and heartbeat interfaces, ensuring they are on separate network adapters to avoid split-brain issues.

Example steps: Right-click a StarWind server, select “Add Device (advanced),” choose “Synchronous Two-Way Replication,” and select Heartbeat as the failover strategy.
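Before creating the device, it can help to sanity-check the planned channel layout on paper. The sketch below is a hypothetical helper, not part of any StarWind tool, and the adapter names are placeholders. It only flags the two points made above: synchronization and heartbeat sharing an adapter, and having a single heartbeat channel.

```python
def check_channel_layout(sync_ifaces, heartbeat_ifaces):
    """Return a list of warnings for a planned sync/heartbeat layout."""
    warnings = []
    shared = sorted(set(sync_ifaces) & set(heartbeat_ifaces))
    if shared:
        warnings.append(f"adapters carry both sync and heartbeat: {', '.join(shared)}")
    if len(set(heartbeat_ifaces)) < 2:
        warnings.append("only one heartbeat channel configured")
    return warnings


# Placeholder adapter names, for illustration only.
print(check_channel_layout(sync_ifaces=["eth2"], heartbeat_ifaces=["eth0", "eth1"]))
print(check_channel_layout(sync_ifaces=["eth1"], heartbeat_ifaces=["eth1"]))
```

An empty list means the layout follows both recommendations.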

Advantages:

- Simplifies deployment by not requiring additional hardware or external resources.

- Maintains cluster operation with a single node, enhancing availability.

- Reduces complexity in small-scale environments.

Limitations:

- Relies heavily on network reliability; loss of all heartbeat links can lead to single-node operation, increasing the risk of data divergence if not carefully managed.

- Requires careful network configuration to ensure heartbeat channels are independent and robust.

2. Node Majority Failover Strategy

The Node Majority failover strategy ensures cluster operation by requiring a majority of nodes (more than half) to remain connected to maintain quorum. This strategy is used when data integrity and strict synchronization are paramount, but it requires additional components in a two-node setup.

The cluster remains operational only if more than half of the HA device’s nodes are connected, determined by a voting system where each node has a “vote.”

In a two-node HA setup, a third component (either a Node Witness or File Share Witness) is required to achieve an odd number of votes, ensuring a majority can be established. Without this, both nodes would disconnect if communication is lost, as neither can achieve a majority. If a node loses connection with the majority, it stops processing client requests to prevent split-brain scenarios.

For a three-node HA setup, no additional witness is needed, as the three nodes naturally provide an odd number of votes, allowing the cluster to tolerate the failure of one node.

No heartbeat links required: Unlike the Heartbeat strategy, Node Majority relies solely on the voting mechanism, eliminating the need for additional heartbeat channels.

Tolerates single-node failure: In a two-node setup with a witness, or a three-node setup, the cluster can tolerate the failure of one node. However, if two nodes fail, the remaining node becomes unavailable to clients.

Synchronization journals: StarWind supports RAM-based (default) or disk-based journals to avoid full synchronization in certain scenarios. Disk-based journals should be placed on a separate, fast disk (e.g., SSD or NVMe) to maintain performance.

Use Case: Suitable for environments where strict data consistency is critical, and the infrastructure can support a third witness component or a three-node setup.

Ideal for larger or geographically distributed clusters where network reliability may vary, and a voting-based quorum is preferred.

Configuration: Select Node Majority as the failover strategy during HA device creation.

For a two-node setup, configure either a Node Witness or File Share Witness (see below for details).

Specify the partner node’s hostname, IP address, and port number, and select “Synchronous Two-Way Replication.”

Advantages:

- Eliminates the risk of split-brain entirely by requiring a majority vote, ensuring data consistency.

- Does not rely on heartbeat links, simplifying network configuration in some scenarios.

- Works well in three-node setups without additional configuration.

Limitations:

- Requires a third component (Node Witness or File Share Witness) for two-node setups, increasing complexity and cost.

- If two nodes fail in a three-node setup, or if the witness and one node fail in a two-node setup, the cluster becomes unavailable.
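To see which failures a two-node setup with a witness survives, one can enumerate them. This is a rough sketch under simplifying assumptions: a failed member is fully down, all surviving members can still reach each other, and each member holds one vote. The names `cluster_available`, `node1`, `node2` and `witness` are made up for the example.

```python
from itertools import combinations


def cluster_available(members: dict, failed: set) -> bool:
    """members maps a member name to True if it stores data (False for a witness)."""
    alive = [name for name in members if name not in failed]
    has_quorum = 2 * len(alive) > len(members)
    has_data_node = any(members[name] for name in alive)
    return has_quorum and has_data_node


two_plus_witness = {"node1": True, "node2": True, "witness": False}

for count in (1, 2):
    for failed in combinations(two_plus_witness, count):
        ok = cluster_available(two_plus_witness, set(failed))
        print(f"failed: {', '.join(failed)} -> {'available' if ok else 'unavailable'}")
```

Every single failure, including the loss of the witness alone, leaves the storage available. Any double failure does not.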

Node Witness

Description: A Node Witness is a third StarWind server that participates in the voting process for the Node Majority strategy but does not store user data or process client requests.

It ensures an odd number of votes in a two-node HA setup, allowing the cluster to maintain quorum if one node fails.

Configuration: Add a new StarWind server as the witness node in the StarWind Management Console (right-click Servers > Add Server).

For the HA device, open the Replication Manager, select “Add Replica,” choose “Witness Node,” and specify the witness node’s hostname or IP address (default port: 3261).

Configure the synchronization channel for the witness node, ensuring it is accessible from both primary nodes.

Example: In a two-node setup, the witness node is added to provide a third vote, ensuring the cluster remains operational if one node is lost.
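Since both data nodes must be able to reach the witness, a quick TCP check of the default port 3261 from each node can save some troubleshooting. The sketch below uses only the Python standard library. The function name `port_reachable` and the hostname in the usage comment are placeholders, and a successful connection only shows that the port is open, not that the StarWind service behind it is healthy.

```python
import socket


def port_reachable(host: str, port: int = 3261, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution errors.
        return False


# Example call, run from each data node (placeholder hostname):
# port_reachable("witness.example.local")
```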

Requirements:

- The witness node requires minimal resources, as it does not handle data or client requests.

- Network connectivity must be reliable, with at least a 1 Gbps link (10 Gbps recommended) for synchronization.

- The witness node must be a separate StarWind server, which can be a physical or virtual machine.

Use Case:

- Preferred when a dedicated server or virtual machine is available to act as a witness, especially in environments with existing infrastructure to support it.

- Suitable for organizations prioritizing data consistency and willing to invest in a third node.

Advantages:

- Ensures strict quorum without requiring external storage or complex network configurations.

- Integrates seamlessly with StarWind’s ecosystem.

Limitations:

- Adds hardware or virtual machine overhead, increasing costs compared to the Heartbeat strategy.

- Requires careful network planning to ensure the witness node remains accessible.

3. File Share Witness

A File Share Witness (FSW) is an SMB file share used as a voting member in the Node Majority strategy, replacing the need for a dedicated witness node.

The file share must be located outside the StarWind nodes and accessible by both nodes using a service account with write permissions.

Configuration: Create a shared folder on a separate server or device (not on the StarWind nodes) and configure it as an SMB share.

Ensure the folder is accessible from both StarWind nodes using a local or domain account with write permissions.

Use the CreateHASmbWitness.ps1 PowerShell script (found in C:\Program Files\StarWind Software\StarWind\StarWindX\Samples\powershell) to configure the FSW. Edit the script to specify the file share path, credentials, and synchronization interfaces for both nodes.

Run the script to integrate the FSW with the HA device.
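Before editing and running the script, it is worth confirming that the share is really writable from each StarWind node. The snippet below is a generic check written for this post, not something taken from StarWind's samples. The function name `share_is_writable` and the UNC path in the usage comment are placeholders, and the check should be run under the same account that will be used for the witness.

```python
import os
import tempfile


def share_is_writable(path: str) -> bool:
    """Try to create and delete a small temporary file inside path."""
    try:
        fd, name = tempfile.mkstemp(prefix="fsw_probe_", dir=path)
        os.close(fd)
        os.remove(name)
        return True
    except OSError:
        # Missing folder, no permission, or share not reachable.
        return False


# Example call on a StarWind node (placeholder UNC path):
# share_is_writable(r"\\fileserver\witness")
```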

Example: For a two-node HA device, the FSW provides the third vote, ensuring quorum if one node fails.

Requirements: The file share must be hosted on a separate system (e.g., a domain-joined Windows server or any device supporting SMB 2 or later for Windows Server 2019 clusters).

The share must be highly available to avoid becoming a single point of failure. It’s recommended to place the FSW in a separate location from the cluster nodes.

Minimal storage is required (e.g., a small folder for voting metadata).

Use Case: Ideal for environments where a third server is not available, but a reliable SMB share can be configured (e.g., on an existing file server or NAS).

Suitable for cost-conscious setups that want to avoid the overhead of a dedicated witness node.

Advantages:

- Reduces hardware costs by leveraging existing infrastructure (e.g., a file server).

- Simplifies deployment in environments with limited resources for additional nodes.

- If the FSW goes offline, the cluster can still operate as long as the two nodes remain connected, as the FSW is only a voting member and not the quorum itself.

Limitations:

- Requires a separate, reliable SMB share, which introduces dependency on external infrastructure.

- The FSW must be carefully secured and monitored to ensure accessibility and prevent unauthorized access.

- Less integrated with StarWind’s ecosystem compared to a Node Witness, as it relies on external SMB services.

Comparison of Failover Strategies

| Feature | Heartbeat | Node Majority (Node Witness) | Node Majority (File Share Witness) |
|---|---|---|---|
| Witness Required | No | Yes (Node Witness) | Yes (File Share Witness) |
| Nodes Supported | 2 nodes | 2 nodes + witness or 3 nodes | 2 nodes + SMB share |
| Split-Brain Prevention | Via heartbeat links | Via majority voting | Via majority voting |
| Single-Node Operation | Yes | No (requires majority) | No (requires majority) |
| Network Requirements | Multiple heartbeat links (1 Gbps+) | 1 Gbps+ for synchronization | 1 Gbps+ for synchronization + SMB |
| Complexity | Lower (no witness setup) | Higher (requires witness node) | Moderate (requires SMB share) |
| Cost | Lower (no additional hardware) | Higher (witness node) | Moderate (uses existing SMB share) |
| Tolerates | One node failure | One node failure | One node failure |
| Use Case | Cost-effective 2-node setups | Strict quorum, 3-node setups | 2-node setups with existing SMB share |

Choosing the Right Strategy

Choose Heartbeat if:

- You have a two-node setup and want to minimize costs and complexity.

- You can ensure reliable network connectivity with multiple heartbeat links.

- High availability with single-node operation is a priority, and you can mitigate power failure risks (e.g., with UPS).

Choose Node Majority with Node Witness if:

- You need strict data consistency and want to eliminate split-brain risks entirely.

- You have resources for a third server (physical or virtual) to act as a witness.

- You’re deploying a three-node cluster, where a witness is not required.

Choose Node Majority with File Share Witness if:

- You lack a third server but have access to a reliable SMB share (e.g., on a file server or NAS).

- You want a cost-effective quorum solution without dedicated hardware.

- You can ensure the file share is highly available and secure.
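The criteria above can be condensed into a small helper. Treat it as a reading aid rather than an official rule: the function `suggest_strategy`, its parameters, and the order in which the options are tried (Node Witness before File Share Witness, Heartbeat as the fallback) are assumptions of this sketch.

```python
def suggest_strategy(data_nodes: int,
                     need_strict_quorum: bool,
                     has_witness_server: bool,
                     has_reliable_smb_share: bool) -> str:
    """Map the selection criteria from this post to a failover strategy."""
    if data_nodes >= 3:
        # Three data nodes already provide an odd number of votes.
        return "Node Majority (no witness required)"
    if need_strict_quorum and has_witness_server:
        return "Node Majority with Node Witness"
    if need_strict_quorum and has_reliable_smb_share:
        return "Node Majority with File Share Witness"
    # Two nodes and no usable third vote: rely on redundant heartbeat links.
    return "Heartbeat"


print(suggest_strategy(2, need_strict_quorum=True,
                       has_witness_server=False, has_reliable_smb_share=True))
# Node Majority with File Share Witness
```

Note that a two-node setup that needs strict quorum but has neither a witness server nor an SMB share falls through to Heartbeat here. In practice that is a sign to provide a third vote first.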

Additional Considerations

Network Design: For Heartbeat, use separate network adapters for synchronization and heartbeat to avoid single points of failure. For Node Majority, ensure the witness (node or file share) is on a separate subnet or location for resilience.

Synchronization Journals: Both strategies support RAM-based (faster but volatile) or disk-based (more resilient) journals. Disk-based journals require 2 MB per 1 TB of HA device size for two-way replication (4 MB for three-way). Place journals on fast disks (SSD/NVMe) separate from the StarWind device to optimize performance.
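The journal sizing figures quoted above translate into simple arithmetic. The function name `journal_size_mb` is made up for this example, and it only encodes the two ratios mentioned here (2 MB per TB for two-way, 4 MB per TB for three-way replication).

```python
def journal_size_mb(device_size_tb: float, replicas: int = 2) -> float:
    """Disk-based journal size in MB for an HA device of the given size."""
    mb_per_tb = {2: 2, 3: 4}  # two-way and three-way replication
    if replicas not in mb_per_tb:
        raise ValueError("only two-way or three-way replication is covered")
    return device_size_tb * mb_per_tb[replicas]


print(journal_size_mb(10))              # 20 (MB) for a 10 TB device, two-way
print(journal_size_mb(10, replicas=3))  # 40 (MB) for a 10 TB device, three-way
```

The journals are tiny compared with the device itself, so the placement advice is about disk speed rather than capacity.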

Performance: Heartbeat may offer better performance in two-node setups due to its ability to operate with a single node, but Node Majority ensures stricter consistency, which may be critical for applications like SQL Server.

Cluster Quorum: In Node Majority, the witness (node or file share) acts as a voting member to maintain quorum, not as the quorum itself. If the witness is lost, the cluster can still function as long as the two nodes remain connected.

Final Words

Heartbeat is best for cost-effective, two-node setups where single-node operation is acceptable, and network reliability can be ensured with multiple heartbeat links.

Node Majority with Node Witness suits environments with three nodes or where a dedicated witness server is available, prioritizing data consistency.

Node Majority with File Share Witness is ideal for two-node setups with access to an existing SMB share, balancing cost and quorum requirements.

For detailed configuration steps, refer to StarWind’s official documentation. If you’re deploying in a specific environment (e.g., Hyper-V, vSphere), StarWind provides tailored guides for setting up these failover strategies. Always make sure that your network and power infrastructure are robust enough to support the chosen strategy, and consider consulting StarWind’s technical support for complex deployments.

